Loading large volume of data (around 56k rows) into the database

sona sh, February 15, 2017 - 1:45 pm UTC

There are 56,000 rows in XLS format, and it takes almost 7-8 hours to load them into the Oracle database using TOAD.

February 16, 2017 - 3:40 am UTC

Loading a large chunk of data into the database

sona sh, February 16, 2017 - 10:11 am UTC

Great article, I would say.

But I always get this error when loading the 56k rows:

Import Data into table HR_NEW_TABLE from file C:\Users\Desktop\Org.xls . Task canceled and import rolled back. Task Canceled.

Could you please shed some light on this?

Regards

Sona

RE

GJ, February 17, 2017 - 12:26 pm UTC

Did you try what Connor suggested (save as CSV and use SQL*Loader)?

"Import Data into table HR_NEW_TABLE from file C:\Users\Desktop\Org.xls"

"Task canceled and import rolled back. Task Canceled"

The file used is Org.xls, and I'm sure that's not a SQL*Loader error.

Loading large volume of data (around 56k rows) into the database

sona sh, February 22, 2017 - 8:19 am UTC

The solution provided was really helpful,

but the name column in my file contains values with embedded commas, such as (john,Sam),

so loading the data using the control file will not give the correct result. Can you please suggest something for this?

February 23, 2017 - 2:54 am UTC

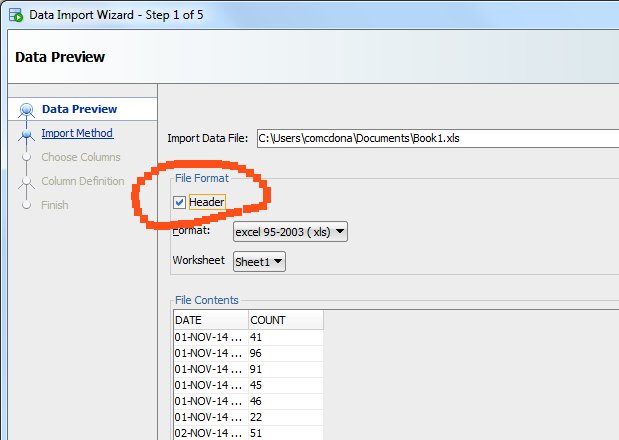

You can choose to skip header rows in the SQL Developer import.
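On the embedded commas in the name column (john,Sam): one common approach, sketched here and not taken from the thread, is to make sure the CSV export wraps such fields in double quotes, and then tell the loader that fields may be quoted. In a SQL*Loader control file that is the `FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'` clause. The small Python sketch below only illustrates the quoting side. The column names and sample rows are hypothetical, since the real layout of Org.xls is not shown.

```python
import csv
import io

# Hypothetical sample rows; the real columns of Org.xls are not shown in the thread.
rows = [
    ["EMP_ID", "NAME", "DEPT"],
    ["101", "john,Sam", "HR"],
    ["102", "Asha", "Finance"],
]


def to_csv_text(data):
    """Serialize rows to CSV text, quoting only fields that need it (e.g. embedded commas)."""
    buf = io.StringIO()
    writer = csv.writer(buf, quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    writer.writerows(data)
    return buf.getvalue()


def parse_csv_text(text):
    """Parse CSV text with a quote-aware reader, so quoted commas stay inside the field."""
    return list(csv.reader(io.StringIO(text)))


csv_text = to_csv_text(rows)
print(csv_text)

# A naive split on commas breaks the name into two fields;
# a quote-aware parser keeps it as one.
naive_fields = csv_text.splitlines()[1].split(",")
parsed_rows = parse_csv_text(csv_text)
print(len(naive_fields), len(parsed_rows[1]))
```

The same idea applies to the load itself: a loader that splits purely on commas sees an extra field for that row, while one that honors the enclosing quotes sees the name as a single value.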

Loading large volume of data (around 56k rows) into the database

sona sh, February 27, 2017 - 2:17 pm UTC

Thanks a lot for your help.